We needed to animate people in 3D for our projects. One option would have been to animate them frame by frame in 3D software such as Blender, but this is extremely time-consuming and doesn't necessarily produce natural-looking motion.

Another option would have been to hire a motion capture studio.

The resulting animations are excellent, but they come with a hefty price tag in operator time and equipment costs. What's more, any change to an animation can quickly become expensive, since it means setting up a recording session all over again, with all the attendant costs.

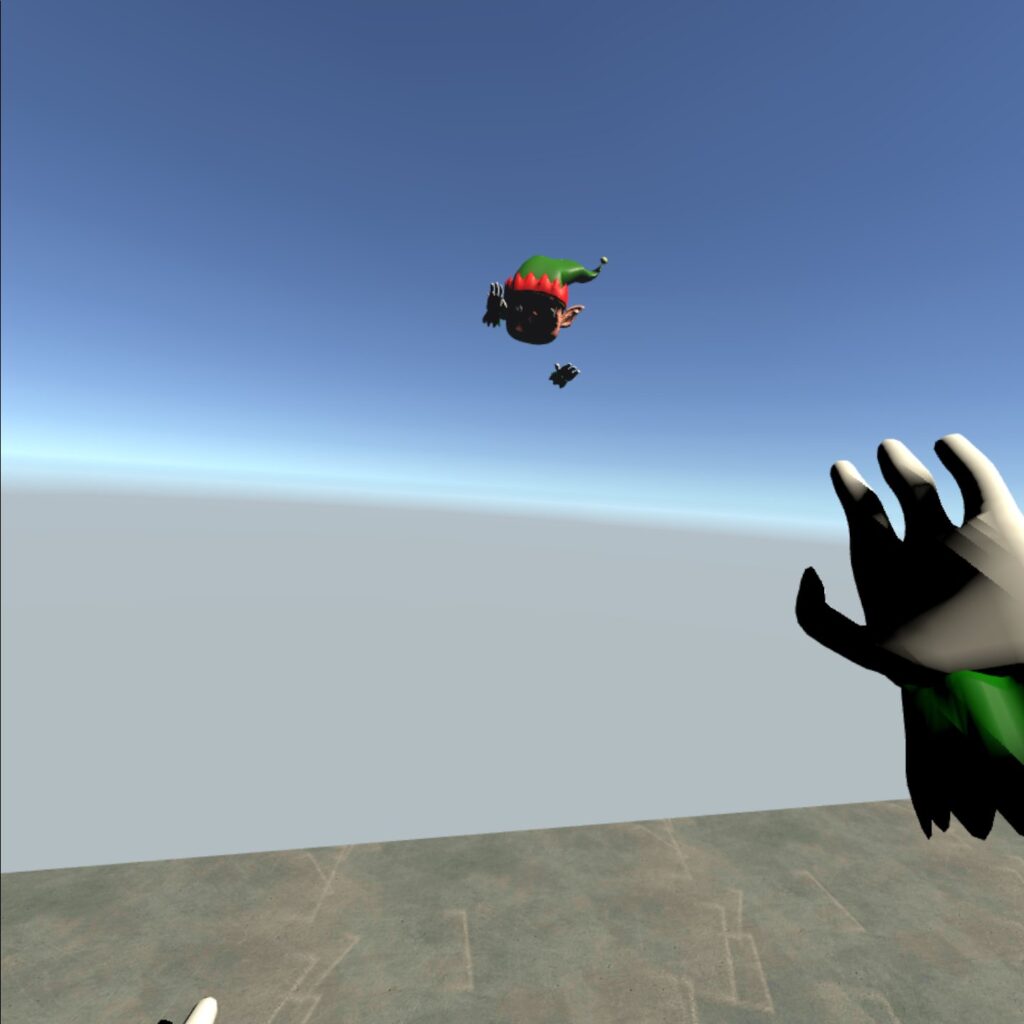

We needed a simple, fast and efficient way to record our animations. Neither animating everything by hand in Blender nor renting a motion capture studio suited our needs, so we built our own solution: the Oculus Quest virtual reality headset and its six-degrees-of-freedom (6DoF) controllers for motion capture, combined with a headset microphone for voice recording.

And guess what? The result is pretty good!

So here's our solution.

Overall architecture

Our home-made motion capture studio is divided into 3 parts.

- A client application running on the Oculus Quest, which continuously sends the position and rotation of the controllers and headset (the telemetry) to a Node.js server, and toggles recording when a controller button is pressed.

- A Node.js server using socket.io, which relays the telemetry between the Oculus Quest and the editor application.

- An "Editor" application, which receives the telemetry from the Oculus Quest and records the animation together with the audio of the animator's voice.
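
To make the data flow concrete, here is roughly what one telemetry message looks like. This sketch assumes the packet is produced by Unity's JsonUtility from the telemetry_packet class in the client script, which writes a Vector3 as {x, y, z} and a Quaternion as {x, y, z, w}; the values and the isTelemetryPacket helper are hypothetical, for illustration only.

```javascript
// Hypothetical example of one telemetry message, in the shape Unity's
// JsonUtility produces for telemetry_packet (Vector3 -> {x, y, z},
// Quaternion -> {x, y, z, w}).
const sampleJson = JSON.stringify({
  left_hand: {
    position: { x: -0.2, y: 1.1, z: 0.3 },
    rotation: { x: 0, y: 0, z: 0, w: 1 },
  },
  right_hand: {
    position: { x: 0.2, y: 1.1, z: 0.3 },
    rotation: { x: 0, y: 0, z: 0, w: 1 },
  },
  head: {
    position: { x: 0, y: 1.6, z: 0 },
    rotation: { x: 0, y: 0, z: 0, w: 1 },
  },
});

const TRACKED = ["left_hand", "right_hand", "head"];

// Returns true when every tracked node has numeric position and rotation fields.
function isTelemetryPacket(packet) {
  const isNum = (v) => typeof v === "number" && Number.isFinite(v);
  return TRACKED.every((key) => {
    const node = packet && packet[key];
    if (!node || !node.position || !node.rotation) return false;
    const p = node.position;
    const r = node.rotation;
    return [p.x, p.y, p.z].every(isNum) && [r.x, r.y, r.z, r.w].every(isNum);
  });
}

console.log(isTelemetryPacket(JSON.parse(sampleJson))); // true
```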

For the occasion, we bought a good wireless headset (Corsair HS70), connected directly to the computer running the editor. It would also be possible to record the sound on the Oculus Quest, but an audio stream is relatively heavy to send over the network, and connecting the wireless microphone directly to the editor machine avoids synchronization problems.
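
To put "heavy" into rough numbers, here is a back-of-the-envelope comparison. It assumes uncompressed 16-bit mono audio at 44,100 Hz and telemetry sent 60 times per second with a hypothetical payload of about 400 bytes; real figures depend on the audio encoding and on the actual JSON size.

```javascript
// Rough bandwidth comparison: raw microphone audio vs. pose telemetry.
// Assumptions: 16-bit PCM mono at 44,100 Hz; 60 packets/s of ~400 bytes each.
function audioBytesPerSecond(sampleRate, bitsPerSample, channels) {
  return sampleRate * (bitsPerSample / 8) * channels;
}

function telemetryBytesPerSecond(packetsPerSecond, bytesPerPacket) {
  return packetsPerSecond * bytesPerPacket;
}

const audio = audioBytesPerSecond(44100, 16, 1);    // 88,200 B/s
const telemetry = telemetryBytesPerSecond(60, 400); // 24,000 B/s
console.log(`audio: ${audio} B/s, telemetry: ${telemetry} B/s`);
console.log(`audio is ~${(audio / telemetry).toFixed(1)}x the telemetry`);
```

Under these assumptions the audio stream is several times larger than the pose stream, and it would also need its own timing information to stay aligned with the animation; recording it on the editor machine sidesteps both issues.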

Because we use a paid package for network communication, we can't publish the project on GitHub. But here's how we did it, along with the code you need to do the same!

The client on the Oculus Quest

First, for all communication between the client, the server and the editor, we used socket.io, which provides real-time, event-based messaging.

To use socket.io in the Unity client and editor applications, we chose an excellent paid package: BestHTTP Pro.

https://assetstore.unity.com/packages/tools/network/best-http-10872

With this package, socket.io support is easy to set up, so you can concentrate on everything else. There's plenty left to develop ;)

The client application running on the Oculus Quest is very simple: a Unity project with a single script that reads the position and rotation of the controllers and headset and sends them to our Node.js server via socket.io.
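
The sending logic in the client script is a countdown timer: each step subtracts the elapsed time, and when the counter drops below zero a packet is sent and the counter is reset to 1/fps. Here is the same countdown as a JavaScript simulation you can run without a headset; the step durations fed in are assumptions (Unity's default 0.02 s fixed timestep, since the script runs in FixedUpdate, and 72 Hz frames for comparison), and simulateTelemetrySends is a hypothetical name.

```javascript
// Simulates the countdown used by socketClient.cs to throttle telemetry:
// the timer starts at 1/fps, loses each step's delta time, and a packet is
// "sent" whenever it drops below zero (then it is reset to a full period).
function simulateTelemetrySends(stepDeltas, fps) {
  const period = 1 / fps;
  let timer = period;
  let clock = 0;
  const sendTimes = [];
  for (const dt of stepDeltas) {
    clock += dt;
    timer -= dt;
    if (timer < 0) {
      timer = period;
      sendTimes.push(clock);
    }
  }
  return sendTimes;
}

// FixedUpdate at Unity's default 0.02 s timestep: 100 steps = 2 seconds.
const fixedSteps = simulateTelemetrySends(new Array(100).fill(0.02), 60);
console.log(fixedSteps.length); // 100 packets in 2 s, i.e. 50 per second

// The same countdown driven by 72 Hz frames: 120 frames = ~1.67 seconds.
const frames72 = simulateTelemetrySends(new Array(120).fill(1 / 72), 60);
console.log(frames72.length); // 60 packets in ~1.67 s, i.e. 36 per second
```

Because the timer is reset to a full period rather than carrying the overshoot over, the effective rate depends on the step length: every 0.02 s physics step sends (50 per second), while 1/72 s steps send only on every other step. Adding the period to the timer instead of resetting it would bring the average closer to the 60 fps target whenever steps are shorter than the period.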

The "socketClient.cs" code:

```csharp
using BestHTTP.SocketIO;
using System;
using System.Collections;
using UnityEngine;

// Pose of one tracked node (controller or headset), serialized with JsonUtility.
[Serializable]
public class TF
{
    public Vector3 position;
    public Quaternion rotation;

    public TF() { } // parameterless constructor for deserialization

    public TF(Transform t)
    {
        position = t.localPosition;
        rotation = t.localRotation;
    }
}

// One telemetry message: both controllers and the headset.
[Serializable]
public class telemetry_packet
{
    public TF left_hand;
    public TF right_hand;
    public TF head;
}

public class socketClient : MonoBehaviour
{
    public const string localSocketUrl = "http://localhost:7000/socket.io/";
    public const string webSocketUrl = "http://yourInternetEndPoint:7000/socket.io/";

    public GameObject right_hand;
    public GameObject left_hand;
    public GameObject head;
    public Material skybox;   // tinted red while recording, white otherwise

    SocketManager Manager;
    telemetry_packet tp;

    bool connect = false;     // true once the socket is connected
    bool record = false;      // current recording state
    bool click = false;       // debounce flag for the record button
    float time = 0f;          // time since the button was released

    float fps = 60f;          // target telemetry rate
    float timeFrame = 0f;     // 1 / fps
    float timeTelemetry = 0f; // countdown to the next packet

    void Start()
    {
        timeFrame = 1f / fps;
        timeTelemetry = timeFrame;

        SocketOptions options = new SocketOptions();
        options.AutoConnect = false;
        options.ConnectWith = BestHTTP.SocketIO.Transports.TransportTypes.WebSocket;

        Manager = new SocketManager(new Uri(webSocketUrl), options);
        Manager.Socket.On("connect", OnConnect);
        Manager.Socket.On("disconnect", OnDisconnect);
        Manager.Open();
    }

    void OnConnect(Socket socket, Packet packet, params object[] args)
    {
        tp = new telemetry_packet();
        connect = true;
        Debug.Log("connected");
    }

    void OnDisconnect(Socket socket, Packet packet, params object[] args)
    {
        connect = false;
        Debug.Log("disconnected");
    }

    // Reads the current poses, serializes them and sends them to the server.
    IEnumerator Tele()
    {
        tp.head = new TF(head.transform);
        tp.right_hand = new TF(right_hand.transform);
        tp.left_hand = new TF(left_hand.transform);
        string json = JsonUtility.ToJson(tp);
        yield return null;
        Manager.Socket.Emit("telemetry", json);
    }

    // Input is read in Update: key events polled in FixedUpdate can be missed.
    void Update()
    {
        if (Input.GetKeyUp(KeyCode.R) || OVRInput.Get(OVRInput.Button.One))
        {
            if (!click)
            {
                time = 0f;
                click = true;
                record = !record;
                skybox.SetColor("_SkyTint", record ? Color.red : Color.white);
                if (connect)
                {
                    Manager.Socket.Emit("setrecording", record);
                }
            }
        }
        else
        {
            // Ignore new presses until the button has been released for one second.
            time += Time.deltaTime;
            if (time > 1f)
            {
                click = false;
            }
        }
    }

    // Sends telemetry at (at most) the target rate while connected.
    void FixedUpdate()
    {
        if (connect)
        {
            timeTelemetry -= Time.deltaTime;
            if (timeTelemetry < 0f)
            {
                timeTelemetry = timeFrame;
                StartCoroutine(Tele());
            }
        }
    }

    void OnDestroy()
    {
        skybox.SetColor("_SkyTint", Color.white);
        // Close the socket when the application quits.
        if (Manager != null)
        {
            Manager.Close();
        }
    }
}
```

The server

The server is straightforward too: it just receives the telemetry from the client and forwards it to the editor. We use Node.js with the "express" and "socket.io" packages, both installable with npm.

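Install them with `npm install express socket.io` ("fs" is built into Node.js and needs no installation). The Unity scripts use BestHTTP's `BestHTTP.SocketIO` namespace, which targets the Socket.IO 2.x protocol; if the clients fail to connect to a newer server, install `socket.io@2` instead.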

We hosted the server on Heroku, which at the time let you run small Node.js services free of charge.

The "server.js" code:

```javascript
var express = require("express");

var app = express();
var http = require("http").Server(app);
var io = require("socket.io")(http);

// Heroku assigns the port through the PORT environment variable;
// when running locally, fall back to 7000.
var port = process.env.PORT || 7000;

app.use(express.static(__dirname + "/public"));

app.get("/", function (req, res) {
  res.redirect("index.html");
});

io.sockets.on("connection", function (socket) {
  console.log("Someone is connected");

  // The Quest toggles recording: forward the new state to every client.
  socket.on("setrecording", function (value) {
    console.log("Recording change to " + value);
    io.emit("recording", value);
  });

  // Relay each telemetry packet (a JSON string) unchanged.
  socket.on("telemetry", function (json) {
    io.emit("telemetry", json);
  });

  socket.on("disconnect", function () {
    console.log("Disconnected");
    io.emit("clientdisconnected", true);
  });
});

console.log("Server is correctly running for the moment :-)");

http.listen(port, function () {
  console.log("Server running at port " + port);
});
```
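
If you want to check the relay logic without a headset, the event mapping of server.js can be mimicked in-process. This sketch swaps socket.io for Node's built-in EventEmitter, an assumption made purely for testing: each hypothetical client has an "up" channel (client to server) and a "down" channel (server to client), and createRelay and createClient are illustrative names, not part of the project.

```javascript
const { EventEmitter } = require("events");

// A fake client: "up" carries client -> server events, "down" server -> client.
function createClient() {
  return { up: new EventEmitter(), down: new EventEmitter() };
}

// In-memory stand-in for server.js; broadcast() plays the role of io.emit().
function createRelay() {
  const clients = new Set();
  const broadcast = (event, payload) => {
    for (const c of clients) c.down.emit(event, payload);
  };
  return {
    connect(client) {
      clients.add(client);
      // Same mapping as server.js.
      client.up.on("setrecording", (value) => broadcast("recording", value));
      client.up.on("telemetry", (json) => broadcast("telemetry", json));
      client.up.on("disconnect", () => {
        clients.delete(client);
        broadcast("clientdisconnected", true);
      });
    },
  };
}

// Usage: the "Quest" emits, the "editor" receives.
const relay = createRelay();
const quest = createClient();
const editor = createClient();
relay.connect(quest);
relay.connect(editor);
editor.down.on("recording", (v) => console.log("editor: recording =", v));
quest.up.emit("setrecording", true); // prints "editor: recording = true"
```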

The editor

This is the most complicated part. First, you need to create a new Unity project.

To save the audio coming from our headset, we used "SavWav", a script published by darktable.

Why reinvent the wheel?

https://gist.github.com/darktable/2317063
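
SavWav handles the conversion from Unity's floating-point samples to a .wav file. To show what such a conversion involves, here is a JavaScript sketch that writes the standard 44-byte RIFF/WAVE header followed by 16-bit little-endian PCM samples. It is not a port of SavWav, and encodeWav is a hypothetical name.

```javascript
// Encodes float samples in [-1, 1] as a 16-bit PCM WAV file (RIFF header + data).
// Multi-channel input is expected to be interleaved, as Unity's GetData returns it.
function encodeWav(samples, sampleRate, channels) {
  const bytesPerSample = 2;
  const dataSize = samples.length * bytesPerSample;
  const buf = Buffer.alloc(44 + dataSize);

  buf.write("RIFF", 0);
  buf.writeUInt32LE(36 + dataSize, 4);                           // file size minus 8
  buf.write("WAVE", 8);
  buf.write("fmt ", 12);
  buf.writeUInt32LE(16, 16);                                     // fmt chunk size (PCM)
  buf.writeUInt16LE(1, 20);                                      // format 1 = PCM
  buf.writeUInt16LE(channels, 22);
  buf.writeUInt32LE(sampleRate, 24);
  buf.writeUInt32LE(sampleRate * channels * bytesPerSample, 28); // byte rate
  buf.writeUInt16LE(channels * bytesPerSample, 32);              // block align
  buf.writeUInt16LE(16, 34);                                     // bits per sample
  buf.write("data", 36);
  buf.writeUInt32LE(dataSize, 40);

  // Clamp each float to [-1, 1] and scale it to a signed 16-bit integer.
  samples.forEach((s, i) => {
    const clamped = Math.max(-1, Math.min(1, s));
    buf.writeInt16LE(Math.round(clamped * 32767), 44 + i * bytesPerSample);
  });
  return buf;
}

const wav = encodeWav([0, 0.5, -0.5, 1], 44100, 1);
console.log(wav.length); // 52 (44-byte header + 4 samples x 2 bytes)
```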

The first thing the editor needs to do is retrieve the telemetry data from our server.

The "socketMaster.cs" code:

```csharp
using BestHTTP.SocketIO;
using System;
using UnityEngine;

// Same data classes as on the client, so JsonUtility can deserialize the packets.
[Serializable]
public class telemetry_packet
{
    public TF left_hand;
    public TF right_hand;
    public TF head;
}

[Serializable]
public class TF
{
    public Vector3 position;
    public Quaternion rotation;

    public TF() { } // parameterless constructor for deserialization

    public TF(Transform t)
    {
        position = t.position;
        rotation = t.rotation;
    }
}

public class socketMaster : MonoBehaviour
{
    public const string localSocketUrl = "http://localhost:7000/socket.io/";
    public const string webSocketUrl = "http://yourEndPoint:7000/socket.io/";

    SocketManager Manager;
    Follower follow;
    AnimMicroRecorder amr;

    void Start()
    {
        // Look up the scene components before any socket event can arrive.
        follow = GameObject.FindObjectOfType<Follower>();
        amr = GameObject.FindObjectOfType<AnimMicroRecorder>();

        SocketOptions options = new SocketOptions();
        options.AutoConnect = false;
        options.ConnectWith = BestHTTP.SocketIO.Transports.TransportTypes.WebSocket;

        Manager = new SocketManager(new Uri(webSocketUrl), options);
        Manager.Socket.On("connect", OnConnect);
        Manager.Socket.On("disconnect", OnDisconnect);
        Manager.Socket.On("telemetry", Telemetry);
        Manager.Socket.On("recording", OnStatusChange);
        Manager.Open();
    }

    void OnConnect(Socket socket, Packet packet, params object[] args)
    {
        Debug.Log("connected");
    }

    void OnDisconnect(Socket socket, Packet packet, params object[] args)
    {
        Debug.Log("disconnected");
    }

    // args[0] is the JSON string relayed by the server.
    void Telemetry(Socket socket, Packet packet, params object[] args)
    {
        string data = (string)args[0];
        telemetry_packet tp = JsonUtility.FromJson<telemetry_packet>(data);
        bool first = follow.tp == null;
        follow.tp = tp;
        if (first)
        {
            // Use the first received head position as the origin.
            follow.setHeadOriginal();
        }
    }

    // args[0] is the recording state sent by the Quest.
    void OnStatusChange(Socket socket, Packet packet, params object[] args)
    {
        bool record = (bool)args[0];
        Debug.Log("OnStatusChange " + record);
        if (record)
        {
            follow.record = true;
            follow.setHeadOriginal();
            amr.startRecording();
        }
        else
        {
            amr.stopRecording();
            follow.record = false;
        }
    }

    void OnDestroy()
    {
        // Close the socket when leaving play mode.
        if (Manager != null)
        {
            Manager.Close();
        }
    }
}
```

When we receive the telemetry JSON from our server, we deserialize it into a telemetry_packet and pass it to an object called "Follower", which reproduces the position and rotation movements recorded on the client side.
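
The Follower does a little more than copy the received poses: it subtracts the head position stored by setHeadOriginal (on the first packet, and again whenever recording starts), so that at that moment the avatar's head sits at the origin of its parent object. Rotations are applied as received. Here is a JavaScript sketch of the same transform; sub and recenter are hypothetical helper names and the sample values are made up.

```javascript
// Vector subtraction on {x, y, z} objects.
function sub(a, b) {
  return { x: a.x - b.x, y: a.y - b.y, z: a.z - b.z };
}

// Re-expresses every tracked position relative to `origin` (the stored head
// position), as Follower does; rotations are copied unchanged.
function recenter(packet, origin) {
  const out = {};
  for (const key of ["left_hand", "right_hand", "head"]) {
    out[key] = {
      position: sub(packet[key].position, origin),
      rotation: { ...packet[key].rotation },
    };
  }
  return out;
}

const identity = { x: 0, y: 0, z: 0, w: 1 };
const first = {
  head: { position: { x: 0, y: 1.5, z: 0 }, rotation: identity },
  right_hand: { position: { x: 0.25, y: 1.25, z: 0.5 }, rotation: identity },
  left_hand: { position: { x: -0.25, y: 1.25, z: 0.5 }, rotation: identity },
};
const origin = first.head.position; // what setHeadOriginal stores
console.log(recenter(first, origin).right_hand.position); // { x: 0.25, y: -0.25, z: 0.5 }
```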

The "Follower.cs" code:

```csharp
using UnityEngine;

// Drives the avatar's head and hands from the latest telemetry packet.
public class Follower : MonoBehaviour
{
    [HideInInspector]
    public telemetry_packet tp;   // latest packet, set by socketMaster

    public Transform root;
    public GameObject right_hand;
    public GameObject left_hand;
    public GameObject head;
    public bool record = false;

    TF original_pos;              // initial pose of root (not used further here)
    Vector3 originalHeadPosition = Vector3.zero;

    void Start()
    {
        original_pos = new TF(root);
    }

    // Stores the current head position; later positions are made relative to it.
    public void setHeadOriginal()
    {
        if (tp != null)
        {
            originalHeadPosition = tp.head.position;
        }
    }

    // Applies the latest received pose on every physics step.
    void FixedUpdate()
    {
        if (tp == null)
        {
            return;
        }

        right_hand.transform.localPosition = tp.right_hand.position - originalHeadPosition;
        left_hand.transform.localPosition = tp.left_hand.position - originalHeadPosition;
        head.transform.localPosition = tp.head.position - originalHeadPosition;

        right_hand.transform.rotation = tp.right_hand.rotation;
        left_hand.transform.rotation = tp.left_hand.rotation;
        head.transform.rotation = tp.head.rotation;
    }
}
```

Now, when a controller moves on the client, it also moves in the editor, so all we have to do is save the animation and the sound. To record the animation, we use GameObjectRecorder, an Editor-only Unity API that records the properties of a GameObject hierarchy into an AnimationClip. To record the sound, we use darktable's "SavWav" script, linked above.
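
The recorder below samples with the same kind of countdown as the client: every 1/fps seconds it calls TakeSnapshot, passing the time elapsed since the previous snapshot. Since it runs in FixedUpdate, the step length is Unity's fixed timestep (0.02 s by default, an assumption about your project settings). A JavaScript simulation of that schedule; snapshotDeltas is a hypothetical name.

```javascript
// Mirrors AnimMicroRecorder's sampling: a countdown of 1/fps decides when to
// snapshot, and each snapshot receives the time elapsed since the previous one
// (the value passed to GameObjectRecorder.TakeSnapshot).
function snapshotDeltas(stepDeltas, fps) {
  const period = 1 / fps;
  let countdown = period;
  let sinceLast = 0;
  const deltas = [];
  for (const dt of stepDeltas) {
    sinceLast += dt;
    countdown -= dt;
    if (countdown < 0) {
      deltas.push(sinceLast);
      countdown = period;
      sinceLast = 0;
    }
  }
  return deltas;
}

// One second of FixedUpdate steps at 0.02 s with a 30 fps target.
const oneSecond = snapshotDeltas(new Array(50).fill(0.02), 30);
console.log(oneSecond.length); // 25 snapshots, each 0.04 s apart
```

With a 0.02 s step, the countdown fires on every other step, so you get 25 snapshots per second rather than 30. Because each snapshot carries its real elapsed time, the motion keeps its correct timing; it is just sampled a little more coarsely than the 30 fps setting suggests.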

The "AnimMicroRecorder.cs" code:

```csharp
using System.Collections.Generic;
using System.IO;
using UnityEditor;
using UnityEditor.Animations;
using UnityEngine;

// Editor-only: records the avatar into an .anim clip and the microphone into a .wav file.
// GameObjectRecorder and AssetDatabase come from UnityEditor, so this runs only in the Editor.
public class AnimMicroRecorder : MonoBehaviour
{
    public GameObject root_target;
    public Material mat_skybox;
    public float _TrimCutoff = .01f;  // Minimum volume of the clip before it gets trimmed (unused)

    private AnimationClip clip;
    private GameObjectRecorder m_Recorder;

    AudioClip _RecordingClip;
    int _SampleRate = 44100;          // Audio sample rate
    int _MaxClipLength = 300;         // Maximum length of the audio recording, in seconds

    float fps = 30f;                  // animation sampling rate
    bool record = false;
    float timeRecord = 0f;            // total recorded time, used to trim the audio
    float timefps = 0f;               // countdown to the next snapshot
    float timeFrame = 0f;             // time elapsed since the previous snapshot

    public void startRecording()
    {
        mat_skybox.SetColor("_SkyTint", Color.red);
        Debug.Log("Start Recording");

        clip = new AnimationClip();
        m_Recorder = new GameObjectRecorder(root_target);
        // Record every Transform under root_target (recursively).
        m_Recorder.BindComponentsOfType<Transform>(root_target, true);

        timeRecord = 0f;
        timefps = 1f / fps;
        timeFrame = 0f;
        record = true;

        if (Microphone.devices.Length > 0)
        {
            // "" selects the default microphone; the buffer loops after _MaxClipLength seconds.
            _RecordingClip = Microphone.Start("", true, _MaxClipLength, _SampleRate);
        }
    }

    public void stopRecording()
    {
        if (!record)
        {
            return; // nothing to stop
        }
        mat_skybox.SetColor("_SkyTint", Color.white);
        Debug.Log("Stop Recording");
        record = false;

        // Save the animation as an asset (the folder must already exist).
        m_Recorder.SaveToClip(clip, fps);
        string fileName = System.DateTime.Now.ToString("dd-HH-mm-ss");
        AssetDatabase.CreateAsset(clip, "Assets/Resources/Recordings/Animations/" + fileName + ".anim");
        AssetDatabase.SaveAssets();

        if (Microphone.devices.Length > 0 && _RecordingClip != null)
        {
            Microphone.End("");

            // Copy the whole (interleaved) buffer, then keep only the recorded duration.
            int channels = _RecordingClip.channels;
            var samples = new float[_RecordingClip.samples * channels];
            _RecordingClip.GetData(samples, 0);
            int numberOfSamples = Mathf.Min((int)(timeRecord * _SampleRate) * channels, samples.Length);
            var list_samples = new List<float>(samples);
            list_samples.RemoveRange(numberOfSamples, list_samples.Count - numberOfSamples);

            var tempclip = AudioClip.Create("TempClip", numberOfSamples / channels,
                channels, _RecordingClip.frequency, false);
            tempclip.SetData(list_samples.ToArray(), 0);

            string path = Path.Combine(Application.dataPath, "Resources", "Recordings", "Animations", fileName);
            SavWav.Save(path, tempclip);
        }
    }

    // Keyboard toggle for testing in the Editor (input is read in Update).
    void Update()
    {
        if (Input.GetKeyDown(KeyCode.R))
        {
            if (record) stopRecording();
            else startRecording();
        }
    }

    // Takes a snapshot roughly every 1/fps seconds while recording.
    void FixedUpdate()
    {
        if (!record)
        {
            return;
        }
        timeRecord += Time.deltaTime;
        timeFrame += Time.deltaTime;
        timefps -= Time.deltaTime;
        if (timefps < 0f)
        {
            // Record all bound values, passing the time since the previous snapshot.
            m_Recorder.TakeSnapshot(timeFrame);
            timefps = 1f / fps;
            timeFrame = 0f;
        }
    }

    void OnDestroy()
    {
        mat_skybox.SetColor("_SkyTint", Color.white);
    }
}
```
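
AnimMicroRecorder trims the microphone buffer with a simple calculation: recorded time × sample rate. Two details are easy to miss: for multi-channel input the sample array is interleaved, so the count must be multiplied by the channel count; and because Microphone.Start is called with looping enabled, a take longer than _MaxClipLength (300 s) wraps around, so the computed count can exceed the buffer length. Here is a JavaScript sketch of the trim with a channel factor and a clamp; samplesToKeep and trimRecording are hypothetical names.

```javascript
// Number of floats to keep from the looping microphone buffer after a take,
// mirroring AnimMicroRecorder's trim but clamped to the buffer size.
function samplesToKeep(recordedSeconds, sampleRate, channels, bufferLength) {
  const frames = Math.floor(recordedSeconds * sampleRate); // per-channel samples
  return Math.min(frames * channels, bufferLength);
}

// Returns only the recorded part of an interleaved sample buffer.
function trimRecording(buffer, recordedSeconds, sampleRate, channels) {
  return buffer.slice(0, samplesToKeep(recordedSeconds, sampleRate, channels, buffer.length));
}

const bufferLength = 300 * 44100; // 300 s mono buffer at 44.1 kHz
console.log(samplesToKeep(12.5, 44100, 1, bufferLength)); // 551250
console.log(samplesToKeep(310, 44100, 1, bufferLength));  // 13230000 (clamped)
```

The clamp only prevents the out-of-range error: past 300 s the looping buffer has already overwritten the beginning of the take, so for long recordings the simplest fix is to raise _MaxClipLength.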

Conclusion

You now have all the pieces you need to set up your own motion capture studio with an Oculus Quest and a headset microphone. It feels like magic: our only limit now is our imagination!

To take things a step further, you can animate your character's mouth with a package called "Salsa Sync", also available on the Unity Asset Store. It's easy to use and looks realistic! To record the demo video, we used the "Unity Recorder" package, which you can find in the Unity Package Manager.

We look forward to hearing from you. If you improve this project or record animations with it, please send us your results!
